While many of us have been focused on those important questions, the funding landscape has shifted beneath our feet. With the current global political context and shrinking space for civil society, we need new ways to build support and keep delivering impact. We need to rethink how civil society works, and AI can be a helpful tool in that process.

Too long; didn’t read? Here’s what you need:

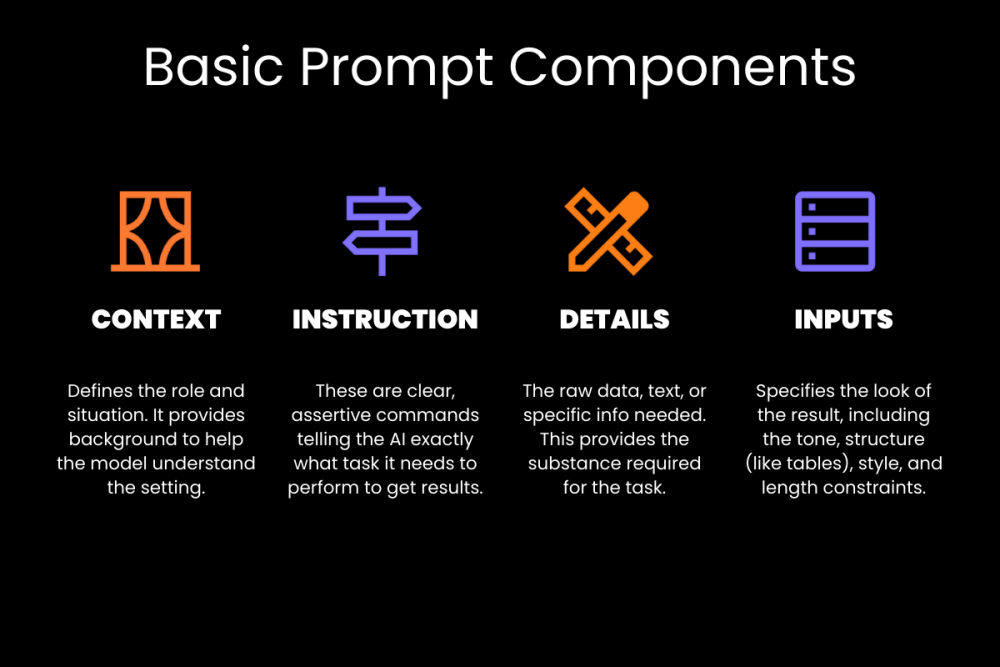

Structure rules. Make sure your prompt addresses context, instructions, inputs, and output format.

For reasoning models, use these 7 components: role, objective, methodology, output format, constraints, uncertainties, and validation.

Get started now: access copy/paste templates and real examples in the Fundraising Prompt Library.

The problem with "just ask AI"

The internet is full of “AI slop.”

Image 2. AI slop description


That whole paragraph was entirely AI-generated. With all those big words (game-changing, revolutionizing, leverages) and zero meaning, it is a textbook example of “AI slop.”

Merriam-Webster defines it as “digital content of low quality that is produced usually in quantity by means of artificial intelligence.” It’s the digital equivalent of spam email: dressed up, confidently delivered, and completely useless.

This is what happens when prompts are weak and vague. The AI fills the gaps with generic filler, producing content that sounds confident but provides no real value.

AI fundamentals

To set realistic expectations, it helps to understand what AI actually does.

We've been using it for years: every Google search, Netflix recommendation, and Siri response relies on it. What's changed recently is the arrival of tools that can generate new content, not just sort existing information.

At its core, AI identifies patterns in data and uses statistics to predict what comes next. It doesn't "understand" like humans do.

When you ask a question, the system estimates the most likely word to follow, then the next word, and so on. It’s assembling outputs based on patterns it learned during training.

Key terms to know:

Artificial Intelligence (AI) systems perform tasks that typically require human intelligence, like recognizing speech or translating languages.

Generative AI creates new content (text, images, video) based on patterns in its training data.

The chatbots you're now seeing (such as ChatGPT, Gemini, or Claude) are Large Language Models (LLMs): systems trained on massive text datasets that generate human-like responses by predicting word sequences.

This leads to the simplest way people use AI: we give an input through a chat interface (a prompt), and we receive an output. It looks simple.

But as the "AI slop" example shows, simple, vague inputs produce useless outputs.

Why weak prompts fail

That is why prompt quality matters. When you work with AI chatbots, your main levers are precision and structure.

You have probably seen (or used) simple prompts that contain only an instruction, with some very vague concepts, like:

“Write a fundraising campaign text to support teens who are at risk of street situations.”

Screenshot from ChatGPT – You can find the transcript here.

Results like this often sound confident but generic: polished on the surface, but not doing the real work.

Key prompting challenges

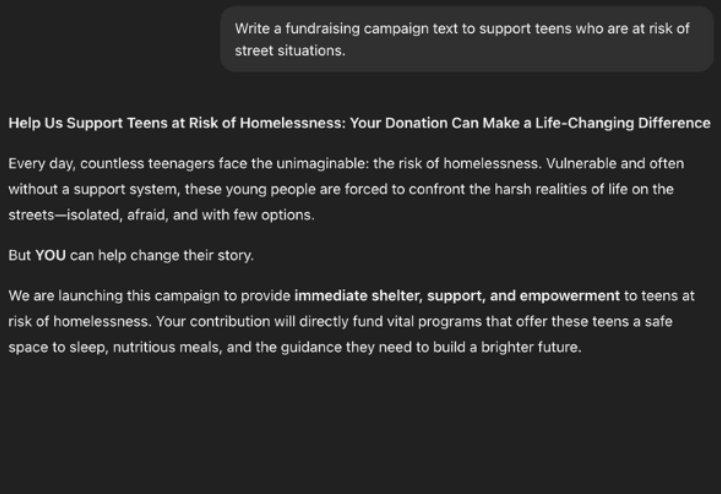

Image 3. Prompting challenges. Generated by NotebookLM by Google.

These challenges are why artificial intelligence so often looks more “artificial” than “intelligent.”

Intention gap - This is the gap between your instruction (what you typed) and your intention (what you actually want to achieve). Because language is still the main interface, the chatbot cannot know your true goal unless you make it explicit. When the goal is unclear, it fills the gaps with generic content.

Precision tax - We often want the AI to “just do the task,” so we throw a loose instruction at it and hope it figures out the rest. Then the model guesses what “be detailed but concise” really means, or what “engaging content” means, and for whom. As it tries to resolve ambiguity, it can drift and produce vague filler.

Illusion of memory - Beginners often assume the chat “remembers everything,” especially within a single thread where they shared many PDFs, project details, and instructions. In reality, when dealing with large inputs, models can lose track of earlier constraints and start “hallucinating” (producing plausible but incorrect details). One practical trick is to include a simple signal in your first message, such as:

“If you have read these instructions and are still following them, write the word [HIVEMIND] at the very end of every response.” When the model “forgets” the instruction, the missing [HIVEMIND] is your early warning.
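If you keep your chatbot replies in a document or a script, the same check can be made systematic. Below is a minimal Python sketch, assuming you copy the replies in as plain text yourself; the function names are hypothetical, and no chatbot API is called.

```python
from typing import List, Optional

# The marker agreed in the first message of the chat.
MARKER = "[HIVEMIND]"


def still_following(reply: str, marker: str = MARKER) -> bool:
    """True if the reply ends with the marker (trailing whitespace ignored)."""
    return reply.rstrip().endswith(marker)


def first_drift(replies: List[str], marker: str = MARKER) -> Optional[int]:
    """Index of the first reply without the marker, or None if every reply has it."""
    for i, reply in enumerate(replies):
        if not still_following(reply, marker):
            return i
    return None


# Replies pasted in by hand, oldest first (illustrative text).
conversation = [
    "Here is the campaign outline you asked for... [HIVEMIND]",
    "Revised hook and call to action... [HIVEMIND]\n",
    "Sure! Here are some general donation ideas.",  # marker missing
]
print(first_drift(conversation))  # prints 2
```

A missing marker is a warning sign, not proof: it tells you which reply to check first against your original constraints.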

Expertise paradox - To judge whether an output is good, you need at least a basic sense of what “good” looks like. A common failure case is asking AI for expert work in a domain the user does not understand (for example, program staff using AI to write marketing content without marketing context).

These challenges sound exhausting, and they are. But here's the good news: the fix is something CSO professionals already know how to do.

Prompting is project management in miniature

A prompt is basically a project brief.

If your brief is vague, you get scope creep, confused stakeholders, and frustration with a deliverable that technically exists, but can’t be used in the real world.

Good prompting is what happens when you stop “asking for mind-reading” and start writing a brief that a smart colleague could actually execute.

The main elements of a good prompt

Image 4. Prompt components


Four common elements improve a prompt:

Context - Explain the situation and the role the model should play. Example: “You are a digital fundraising manager with 10 years of experience leading campaigns in youth organizations.”

Instruction - Define the exact task. Example: “Your task is to write campaign copy / design a fundraising model / analyze supporter profiles.”

Input data - Provide precise details about the task (often as attachments, including program descriptions, activities, constraints, and past examples). Do not share spreadsheets with personal data unless you are using a locally installed model and you know what you are doing.

Output format - Specify what the answer should look like: format, structure, and (ideally) a few examples. If you want campaign copy, share examples of successful past campaigns or ones you personally consider strong.

We call this CIIO (Context, Instruction, Input, Output), though you may see the same concept online as CIDI (Context, Instruction, Details, Input), with slightly different labels.

Not all four elements are always required, but in fundraising they usually help, especially when you want outputs that are ethical, specific, and usable.
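To make the four elements a habit, it can help to keep a fill-in template. Here is a minimal Python sketch, assuming plain-text labels (the class and field names are illustrative, not a standard). Empty elements are skipped, since not every prompt needs all four.

```python
from dataclasses import dataclass


@dataclass
class CIIOPrompt:
    """The four CIIO elements; any of them may be left empty."""
    context: str = ""        # situation and the role the model should play
    instruction: str = ""    # the exact task
    input_data: str = ""     # program details, constraints, past examples (no personal data)
    output_format: str = ""  # format, structure, length, examples

    def render(self) -> str:
        """Join the filled-in elements under simple labels, in CIIO order."""
        parts = [
            ("Context", self.context),
            ("Instruction", self.instruction),
            ("Input", self.input_data),
            ("Output format", self.output_format),
        ]
        return "\n\n".join(
            f"{label}:\n{text.strip()}" for label, text in parts if text.strip()
        )


prompt = CIIOPrompt(
    context="You are a digital fundraising manager with 10 years of experience "
            "leading campaigns in youth organizations.",
    instruction="Write campaign copy to support teens who are at risk of street situations.",
    output_format="A short appeal with a hook, one story, and a clear call to action.",
)
print(prompt.render())
```

In practice, the Input element is where your program description and past campaign examples would go.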

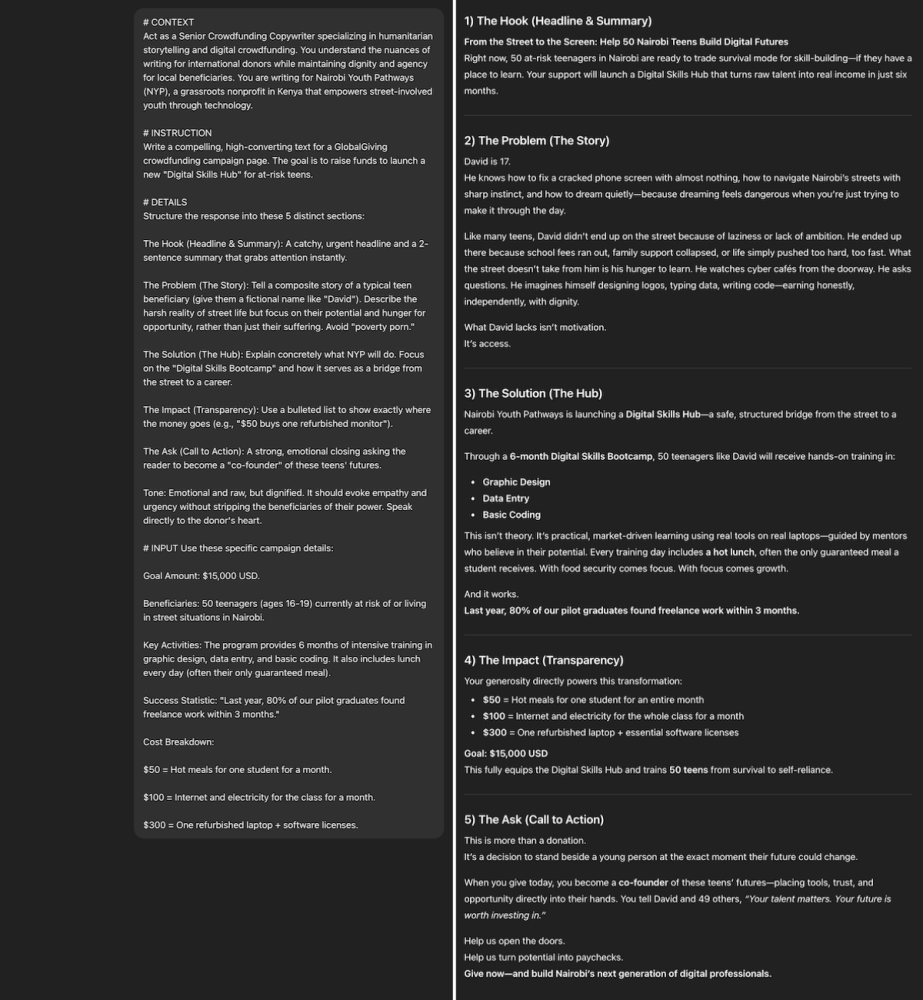

How would you apply this framework to that fundraising campaign for teens at risk of street situations?

Screenshot from ChatGPT – You can find the transcript here


This produces stronger results – the story is much more tangible now and has a nice flow from the hook to the call to action.

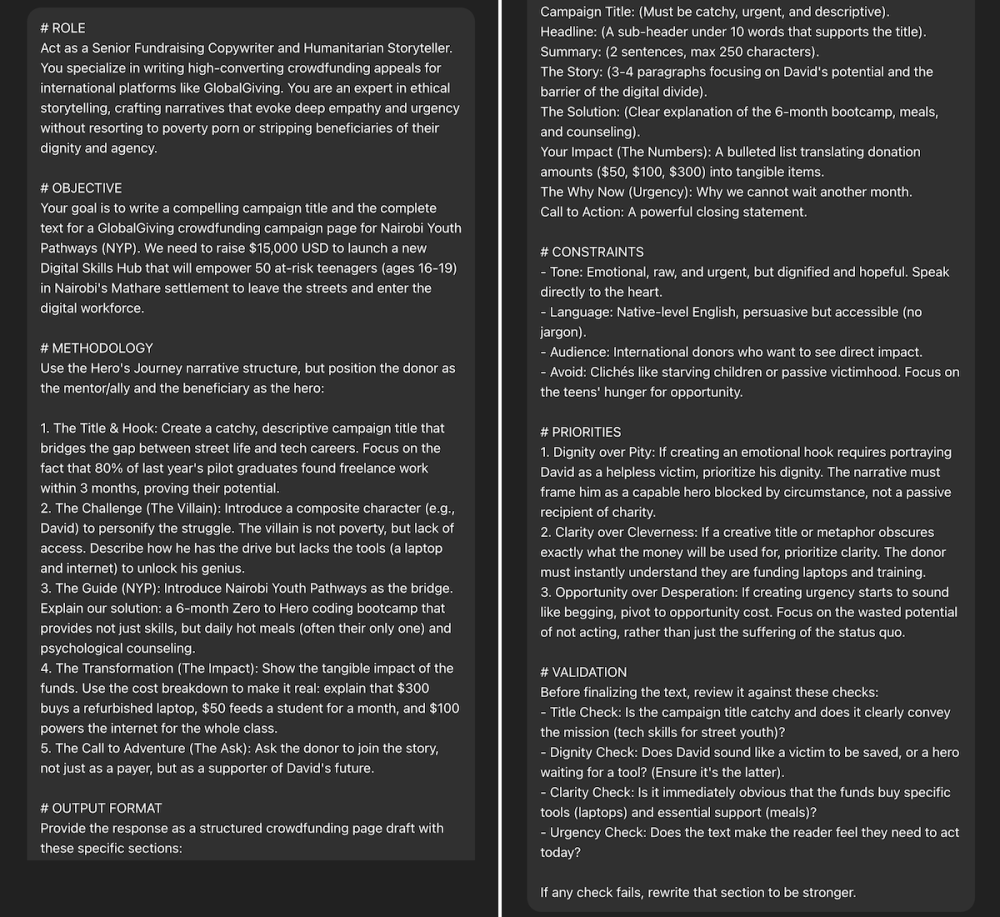

7 components of a great prompt

The arrival of newer reasoning-focused models brings additional options. CIIO still works, but with these models you can be even more precise.

Recent model upgrades have been significant. Newer models follow instructions better than before, and the race between OpenAI and Google seems to ship improvements almost daily. In fact, even between the first and final drafts of this article, there was a noticeable shift with the release of ChatGPT 5.2.

Image 5. Advanced prompt components for reasoning models.


Here are seven components that reliably improve results with these new models:

1. Define the role - Treat the AI like a specialist. Instead of “write an email,” use: “Act as a Corporate Partnerships Manager experienced in the EMEA tech sector.” This signals relevant vocabulary and strategy.

2. Set a clear objective - “Help us get donations” produces generic advice. Anchor the goal: “Draft a crowdfunding campaign narrative for individual donors in Nigeria to support our digital skills bootcamp for unemployed youth, aiming to raise $20,000 in two months.”

3. Process and reasoning effort - Your sector knowledge is a competitive advantage. The AI may know frameworks, but you must request them. Specify how it should think: “Reason step-by-step using the Value Proposition Canvas to align our impact with a tech company’s CSR goals.” For emails, you can request a method like AIDA (Attention, Interest, Desire, Action).

4. Format and verbosity - The model does not know whether you need a LinkedIn post or a proposal. Specify structure and length, for example: “Provide a content calendar table with Date, Platform, Visual Idea, and Caption,” and “Keep the executive summary under one page.”

5. Boundaries and stop conditions - Add guardrails. Example: “Do not use victimizing language; focus on empowerment.” Add a stop condition to reduce guessing: “If you lack specific impact data for this region, stop and ask me for it rather than making assumptions.”

6. Define uncertainty and priorities - Fundraising includes trade-offs. Make priorities explicit: “If maximizing revenue conflicts with our ethical storytelling guidelines, prioritize ethical storytelling.”

7. Verification - Do not accept the first output as final. Ask the model to critique and check itself: “Before finalizing, verify this draft against PESTLE factors to ensure we have not missed political or economic risks,” or “Rate this email from 1–5 for donor-centricity and suggest one improvement.”

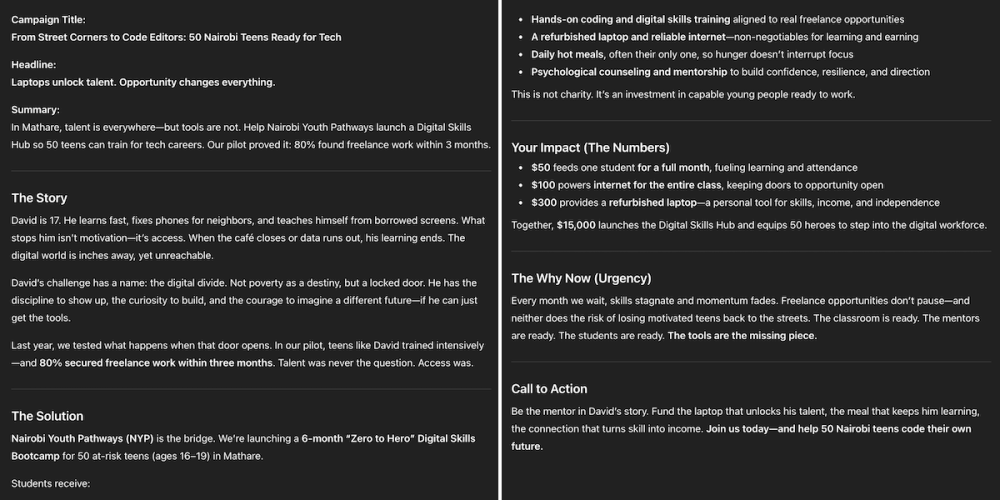

What does this look like in a fundraising example? Here is a structured prompt for developing a new fundraising model for an organization supporting children at risk of living and working on the streets, after a traditional donor exits:

Screenshot from ChatGPT – You can find the transcript here.


When you use this prompt, the output looks like this:

Screenshot from ChatGPT – You can find the transcript here.

This version also adds a layer of storytelling (the example of David) that can resonate emotionally with potential supporters while remaining fully dignified and free of exploitation. Stories like this, combined with impact data, give potential supporters a strong argument for why they should donate.

Additional prompting tips

Ethics

Before finalizing any AI-generated fundraising content, ask:

Does this respect the dignity of the people we serve?

Would our beneficiaries feel accurately and fairly represented?

Have we avoided sharing sensitive or identifiable information?

Does this content reinforce or challenge existing power imbalances?

Are we being transparent about AI's role in creating this?

Have we verified claims and avoided hallucinated statistics?

Ethical AI use isn't about perfection; it's about intentionality. The prompts we write reflect our values, so make those values explicit.

Use markup

Clearly separate sections in your prompt by using headings like this:

# Role

# Objective

# Methodology

# Output format

# Constraints

# Priorities

# Evaluation criteria
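If you reuse this structure often, a small script can assemble the headings for you and flag empty sections before you send the prompt. This is a minimal Python sketch; the function names are hypothetical, and the example values are adapted from the seven components above, with Role and Output format deliberately left out to show the checklist.

```python
from typing import Dict, List

# Section headings from the list above, in the order they appear in the prompt.
SECTIONS = [
    "Role",
    "Objective",
    "Methodology",
    "Output format",
    "Constraints",
    "Priorities",
    "Evaluation criteria",
]


def missing_sections(content: Dict[str, str]) -> List[str]:
    """List the sections that are absent or blank, as a pre-send checklist."""
    return [s for s in SECTIONS if not content.get(s, "").strip()]


def build_prompt(content: Dict[str, str]) -> str:
    """Render each filled-in section under its own Markdown heading, in a fixed order."""
    missing = set(missing_sections(content))
    blocks = [f"# {s}\n{content[s].strip()}" for s in SECTIONS if s not in missing]
    return "\n\n".join(blocks)


draft = {
    "Objective": "Draft a crowdfunding campaign narrative for individual donors in Nigeria "
                 "to support our digital skills bootcamp for unemployed youth, aiming to "
                 "raise $20,000 in two months.",
    "Methodology": "Structure the narrative using AIDA (Attention, Interest, Desire, Action).",
    "Constraints": "Do not use victimizing language; focus on empowerment. If you lack "
                   "specific impact data for this region, stop and ask me for it.",
    "Priorities": "If maximizing revenue conflicts with our ethical storytelling "
                  "guidelines, prioritize ethical storytelling.",
    "Evaluation criteria": "Before finalizing, rate the draft from 1 to 5 for "
                           "donor-centricity and suggest one improvement.",
}

print(missing_sections(draft))  # ['Role', 'Output format']
print(build_prompt(draft))
```

Whether a missing section matters depends on the task; the checklist only makes the gap visible.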

Instruct the chatbot to use tools

If you want the chatbot to use a specific tool it has access to (for example, image generation, deep research, or web search), say that explicitly. Some chatbots can decide when to use tools, but it is safer to specify what you want.

Localization

A common question is language: “I need content in my native language (not English). Should I write the prompt in my native language or write it in English first and then request translation?”

The practical answer is somewhere in between. Models are typically trained more heavily on English and often “think” better in English.

A good approach is to write the prompt in English for clarity and quality but specify that the final output must be in your target language.

Prompt optimization tools

Now that we have covered the elements of a strong prompt, here are two tools that can help:

OpenAI’s Prompt Optimizer for ChatGPT 5 can turn a messy prompt into a structured one. It still helps to understand each component, because the optimizer can mix up instructions and input data. Treat it as a starting point, not a final answer.

Claude also offers a similar prompt-improvement tool, and the prompts it produces can be used with any chatbot. It first guides you through a series of questions and then gives you a final prompt.

These tools can take your input and produce a structured prompt like the one we’ve described. But it usually won’t be the final version.

They can confuse sections, miss key constraints, or drop something important you asked for.

That’s why it matters to understand the anatomy of a good prompt, so you can improve whatever these tools give you.

Multi-step prompting

For more complex tasks, think in multiple steps rather than trying to cram everything into one prompt.

Two useful approaches:

Prompt chaining: turn the final goal into smaller sub-tasks that logically connect. In one chat, you guide the AI through each sub-task, step by step, expanding the context as you go, until you reach the final result.
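Prompt chaining boils down to a loop that keeps the whole conversation and adds one sub-task at a time. Here is a minimal Python sketch, assuming a hypothetical `ask` function that sends the conversation to your chatbot and returns its reply. No real chatbot API is used; a stand-in keeps the example runnable. The sub-tasks are illustrative, loosely based on the donor-exit example above.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (speaker, text)


def run_chain(steps: List[str], ask: Callable[[List[Message]], str]) -> List[Message]:
    """Send each sub-task in order within one conversation.

    `ask` stands in for whatever chatbot you use: it receives the conversation
    so far and returns the model's reply, so each step builds on all earlier
    steps and replies.
    """
    history: List[Message] = []
    for step in steps:
        history.append(("user", step))
        reply = ask(list(history))  # pass a copy so `ask` cannot alter our record
        history.append(("assistant", reply))
    return history


# Hypothetical sub-tasks for building a new fundraising model after a donor exits.
steps = [
    "Summarize our current funding situation from the notes I shared.",
    "Propose three alternative fundraising models that fit this situation.",
    "Draft a one-page plan for the most promising model.",
]


def fake_ask(history: List[Message]) -> str:
    # Placeholder so the sketch runs offline; replace with a real chatbot call.
    return f"(reply to step {len(history) // 2 + 1})"


for speaker, text in run_chain(steps, fake_ask):
    print(f"{speaker}: {text}")
```

In a regular chat window, you do the same thing by hand: send one sub-task, review the reply, then send the next.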

Meta prompting: ask the chatbot to help design the “final prompt” by interpreting what you need, such as expertise (role), output format, constraints, and so on. Practically, this turns the AI from a worker executing a vague request into a manager designing a precise instruction set for that worker.

Treat prompts like terms of reference

These are just a few basic approaches and frameworks for effective prompting, enough to start getting better outputs fast.

The three most important things to remember:

There is no perfect prompt, only iteration. Prompting is a skill you can learn and improve over time. Build your own prompt library as you go.

Structure creates clarity, and clarity creates results. With structure, AI becomes more “intelligent” and less “artificial.” It's like publishing a ToR when you're looking for a consultant.

Stop treating AI like a chat. For the last few years, chat has been how we consume this tech, and while it is useful, it is also limiting. The value isn't in the chatbot's answers; it's in our ability to manage the system precisely.

In the meantime, use the Fundraising Prompt Library to get started.


About the author

Miloš Janković is a digital fundraising consultant working at the intersection of nonprofits and technology across Serbia and the Western Balkans. He helps civil society organizations move from donor-led funding to community-powered models by using practical frameworks plus smart, ethical AI and automation. A former nonprofit executive, he led Donacije.rs, the region’s leading online giving platform, helping organizations build stronger supporter communities and raise funds more effectively. Today, he also works at an IT company as Head of Growth.

Disclaimers

AI tools are evolving rapidly, and while we do our best to ensure the validity of the content we provide, some elements may no longer be up to date. If you notice that a piece of information is outdated, please let us know at content@techsoup.org.

AI Usage Disclosure: This content was created with AI assistance and has been reviewed and edited by Miloš Janković.

ℹ️ This article has been created as part of the AI for Social Change project within TechSoup’s Digital Activism Program, with support from Google.org.