If you're holding multiple, contradictory feelings, you're not alone. And here's what I've learned after guiding organizations through responsible AI journeys: that tension isn't a problem to solve. It's wisdom. It's the starting point for everything that follows.

The Tension Is Real - And It's Telling You Something

Civil society organizations are feeling the pressure. Funders are asking about your AI strategy. Your team is already using tools you haven't formally approved. The technology is moving faster than your policies. And underneath it all, there's a persistent question: How do we use these powerful tools without losing what makes us who we are?

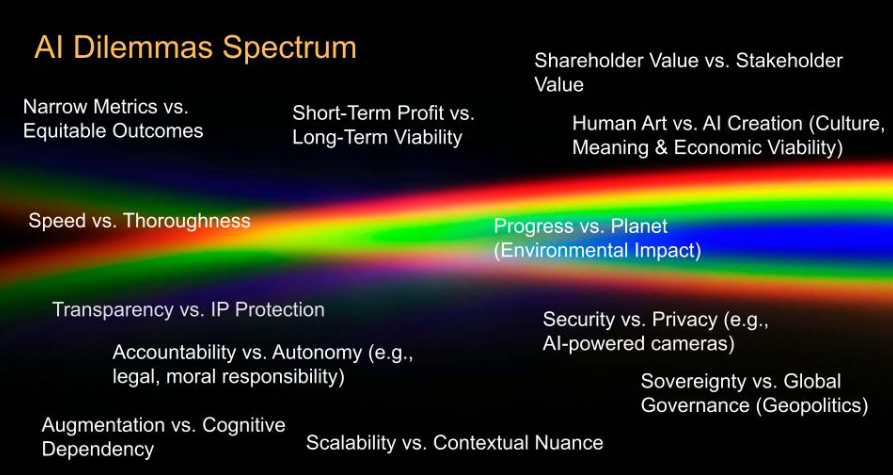

This tension shows up in specific dilemmas:

Efficiency vs. authenticity: AI can help us do more, but does "more" serve our mission or just fill our calendars?

Innovation vs. safety: We want to be forward-thinking, but not at the cost of the communities we serve.

Access vs. privacy: AI could help us reach more people, but what data are we trading to get there?

These aren't questions with clear answers. They exist on a spectrum, and where your organization lands will be different from where another lands. That's not a weakness. It's the nature of values-based work.

Figure 1. AI Dilemmas Spectrum. Illustration showing examples of various dilemmas CSOs face when using AI, e.g. narrow metrics vs. equitable outcomes, human art vs. AI creation (culture, meaning & economic viability), sovereignty vs. global governance (geopolitics). Illustration by Aysegül Güzel Turgut. License: CC BY 4.0 Int.

The discomfort you feel? It's a signal. It's pointing you toward what you actually care about.

Why CSOs Should Lead, Not Follow

Here's what the tech industry often gets wrong: the moment an algorithm enters the real world (sorting job applications, recommending content, allocating resources), it stops being a purely technical system. It becomes entangled with human relationships, institutional power, and community trust.

These are not engineering problems. They're the problems civil society organizations have been navigating for decades.

You already know how to hold complexity. You know how to listen to marginalized voices. You know how to make values-based decisions under uncertainty, with limited resources, in contexts where the stakes are deeply human.

The AI governance conversation needs exactly this expertise.

And yet, too often, CSOs wait on the sidelines, assuming this is a conversation for technologists, for corporations, for regulators. The risk of waiting is real: others will define the rules, and those rules may not reflect your values or the needs of the communities you serve.

The opportunity is equally real: civil society can shape how AI is developed, deployed, and governed, not just react to decisions made elsewhere.

This isn't about becoming AI experts. It's about claiming your seat at the table as values experts.

Tensions as Doorways to Principles

So how do you move from tension to clarity?

The answer isn't to resolve the tension. It's to name it. Your tensions reveal your values. And your values, once articulated, become your principles.

Consider Khan Academy, an education nonprofit. When it introduced Khanmigo, its AI-powered learning tool, it didn't start with technical specifications. It started with tenets, principles like "Achieving Educational Goals," "Learner Autonomy," and "Transparency & Accountability." These tenets emerged from a clear-eyed view of what could go wrong and what the organization wanted to protect.

For its tenet on "Achieving Educational Goals," Khan Academy developed specific guardrails: "There are mechanisms in place to prevent non-educational uses of the AI." When it assessed the risk of inappropriate use, it rated that risk high and built mitigation strategies accordingly, from moderation systems to parental notifications to clear terms of service.

The principles came first. The practical protections followed.

Similarly, consider ChangemakerXchange (CXC), an international network supporting social entrepreneurs. Its AI journey began with honest conversations about tension. As its Mindful AI Manifesto states: "We are deeply critical, especially of Generative AI, recognising its risks: the immense environmental toll... its tendency to amplify bias... and its potential to flatten the unique human creativity our work depends on."

From that honest reckoning, CXC developed principles such as "Mindful Use," which reads: "AI is not the default. Before every potential use, particularly with generative AI, we try to pause and ask ourselves: Is AI really needed for this task? Is it really aligned with our values? What do I lose when I use this tool?"

Both organizations started with the same question: What do we care about protecting? Their principles are different because their missions are different. Yours will be too.

You're Not Starting from Zero

If defining AI principles sounds daunting, here's reassuring news: a global community has been working on this for years.

Since 2019, organizations like the European Commission, the OECD, UNESCO, and the National Institute of Standards and Technology (NIST) have developed frameworks for trustworthy AI. Several common principles have emerged from their work:

Human agency and oversight - humans remain in control of crucial decisions

Privacy and data governance - personal data is protected and used responsibly

Transparency - people understand how AI systems work and affect them

Fairness and non-discrimination - AI doesn't perpetuate bias or create unjust outcomes

Accountability - there's clear responsibility for AI-supported decisions and their consequences

Societal and environmental wellbeing - AI should benefit people and communities while minimizing harm to both society and the planet

These frameworks are a starting point, not a template to copy. The work isn't adopting someone else's principles. It's discovering which principles matter most to your organization, in your context, for your communities.

A principle like "fairness" means something different depending on your mission. For an organization serving refugees, fairness might mean ensuring AI translation tools don't perform worse for less-common languages, or that automated screening doesn't disadvantage applicants from conflict regions. For an environmental advocacy organization, fairness might mean ensuring AI-driven campaign targeting doesn't exclude low-income communities who are most affected by pollution but least likely to be identified as "high-value donors" by an algorithm.

The word is the same. The application is yours to define.

Why Diverse Voices Matter

One of the most common mistakes organizations make is treating principle-setting as a leadership exercise. A small group drafts a document, circulates it for comments, and calls it done.

This approach produces principles that look good on paper but don't hold up in practice. The people closest to your mission, such as program staff, community members, or frontline workers, often see risks that leadership misses. They also know what the organization truly values, not just what it aspires to value.

CXC's Manifesto emerged from deep listening across their team. Khan Academy's framework is maintained by a cross-functional working group including product, data, and user research teams, ensuring diverse perspectives shape their approach.

The process of creating principles is as important as the principles themselves. When people participate in naming what matters, they become guardians of those values, not just recipients of a policy memo.

Principles That Live and Breathe

Here's a truth that might bring relief: the first draft will not be perfect. It doesn't need to be.

Responsible AI principles are living documents. They evolve as technology changes, as your understanding deepens, as you learn from what works and what doesn't.

CXC commits to reviewing their AI documents every six months. Khan Academy continuously evaluates their framework through demos, feedback loops, and stakeholder engagement. Both organizations treat their principles not as finished products but as ongoing conversations.

This doesn't mean principles are vague or uncommitted. It means they're grounded in reality, responsive to a technology landscape that shifts constantly.

Aim for "version one," not "final version." The goal is to begin, not to be perfect.

An Invitation to Begin

If your organization hasn't yet articulated its AI principles, this is your invitation to start.

Not with a policy document. Not with a compliance checklist. But with a conversation.

Gather your team. Create space for honesty, for people to name their hopes, their fears, their tensions. Ask: What are we trying to protect? What do we refuse to compromise? What does responsible AI look like for an organization like ours?

The answers are already in the room. Your work is to surface them, name them, and commit to them together.

This is leadership work. And civil society organizations are built for it.

Ready to Take the Next Step?

Check back on Hive Mind regularly over the next eight weeks for more articles in the series and in-depth guides on responsible AI use for CSOs.

To help you move from inspiration to action, we've created a practical companion to this article that will be published next week: "Creating Your CSO's Responsible AI Principles: A Step-by-Step Guide."

The guide will walk you through the complete process:

How to surface tensions and map your current AI landscape (the LOOK phase)

How to identify and prioritize principles using the Foundational Value Finder framework

How to define principles in your own context with the 4 Defining Questions

A 90-minute workshop agenda you can use with your team

Downloadable worksheets and templates to support your journey

Whether you're a small advocacy nonprofit or a large international NGO, the guide will provide a clear pathway from "we should do something about AI" to "here are the principles that will guide us."

Resources & Further Reading

Khan Academy's Framework for Responsible AI in Education (https://blog.khanacademy.org/khan-academys-framework-for-responsible-ai-in-education/) - How an education nonprofit turned tenets into practical guardrails

ChangemakerXchange Mindful AI Manifesto & Policy (https://changemakerxchange.org/mindful-ai/#manifesto) - A values-driven approach from a global changemaker network

Practical Guide: Creating Your CSO's Responsible AI Principles - Step-by-step instructions and worksheets accompanying this article. PART 1: LOOK - Preparing the Ground is out now!

Next in this series: AI Governance Structures - defining who holds the conversation and how decisions get made

This article is the first in a series, "The Responsible AI Roadmap for CSOs," developed as part of the AI for Social Change project within TechSoup’s Digital Activism Program, with support from Google.org.

About the Author

Ayşegül Güzel is a Responsible AI Governance Architect who helps mission-driven organizations turn AI anxiety into trustworthy systems. Her career bridges executive social leadership, including founding Zumbara, the world's largest time bank network, with technical AI practice as a certified AI Auditor and former Data Scientist. She guides organizations through complete AI governance transformations and conducts technical AI audits. She teaches at ELISAVA and speaks internationally on human-centered approaches to technology. Learn more at https://aysegulguzel.info or subscribe to her newsletter AI of Your Choice at https://aysegulguzel.substack.com.