What LOOK produces:

Surfaced tensions (through interviews or reflection)

An initial AI inventory (what tools are in use, what data they touch, what concerns exist)

A collection of existing wisdom (regulations that apply, your organization's existing values, benchmarks from the field)

All of this becomes raw material for the CREATE phase.

1.1 Start with Tensions

The most powerful starting point is acknowledging the complexity your team is already feeling. AI exists in a space of creative tension: efficiency vs. authenticity, innovation vs. safety, access vs. privacy. These tensions aren't problems to solve; they're doorways to your values.

Two approaches:

Option A: Pre-session interviews

One person conducts brief interviews with team members before the group session. Ask the 5 Questions individually, listen for patterns, and present synthesized insights to the group during the CREATE session. It’s about deep listening before collaborative creation. This approach works well when:

You want to surface honest concerns people might not share in a group

You have diverse stakeholders with different perspectives

You want the CREATE session to focus on synthesis rather than initial discovery

Option B: Live during the session

Gather the team and work through the 5 Questions together during the CREATE session. Allow time for individual reflection before group discussion. This works well for smaller teams or when building shared understanding is as important as the content itself.

This approach works well when:

Your team is small and psychologically safe

Shared discovery is part of the goal

Time for pre-work is limited

The 5 Questions:

Whether gathered beforehand or explored live, these questions surface the raw material for your principles:

1. Our Relationship with AI

As a team, what emotions come up when we think about AI?

Don't skip this. Are people excited? Anxious? Curious? Overwhelmed? Skeptical? Name the feelings. Write them down. Let them be complex and contradictory. This isn't therapy; it's context. You can't have a meaningful conversation about principles if you don't understand the emotional landscape you're operating in.

2. Our Hopes

What is the most positive outcome we hope to achieve with AI?

What becomes possible that wasn't possible before? What problems could we solve? What could we do better, faster, or with greater reach? Let people dream here. This is the "north star" energy, the pull toward what you want to build.

3. Our Fears

What is the harm we are most committed to preventing?

If everything went wrong, what would that look like? What's the outcome that would make you say, "We should never have done this"? What's your red line, the boundary you absolutely will not cross? Can you describe a specific use of AI that would feel fundamentally wrong for an organization like ours, even if it were technically effective? What would cross an ethical line?

This is the "guardrail" energy, recognition of what you need to protect against.

4. Our Non-Negotiables

Looking at our existing organizational values, which one is most critical to uphold as we adopt AI?

Most organizations already have stated values. Which of those feels most at risk in an AI context? Which one must guide everything else?

What part of your work should always remain fully human, where AI assistance would diminish rather than enhance what you do? What should be "firewalled" from automation?

This connects your AI principles to your organizational identity.

5. Our North Star

If we could choose only 3-5 principles to guide our AI work, which would they be and why?

Plant this seed early. You'll return to it with more information in the CREATE phase.

What to capture:

Document everything: the specific tensions people name, the fears, the hopes, the patterns. When certain phrases or words recur, like "authenticity" or "community trust", that's a signal pointing toward your principles.
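If your interview notes are typed up, a short script can help spot recurring phrases. The following is a minimal sketch, not a prescribed method: the function name `count_recurring_phrases`, the sample notes, and the candidate phrases are all hypothetical, and it only counts how many notes mention each phrase you already suspect matters.

```python
from collections import Counter


def count_recurring_phrases(notes, phrases):
    """Count how many notes mention each candidate phrase (case-insensitive)."""
    counts = Counter()
    for note in notes:
        text = note.lower()
        for phrase in phrases:
            if phrase.lower() in text:
                counts[phrase] += 1
    return counts


# Hypothetical interview notes, invented for illustration only.
notes = [
    "I worry we lose authenticity in our donor letters.",
    "Community trust is everything; authenticity matters too.",
    "Excited about saving time, nervous about community trust.",
]

counts = count_recurring_phrases(
    notes, ["authenticity", "community trust", "privacy"]
)

# Print phrases from most to least frequently mentioned.
for phrase, n in counts.most_common():
    print(f"{phrase}: mentioned in {n} note(s)")
```

A count like this only supports the human reading of the notes. The patterns still need to be interpreted by someone who heard the conversations.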

1.2 Begin Your AI Inventory

When to do this: Before the group session, if possible. One person or a small group can draft the initial inventory, then validate and expand it with the full team during the CREATE session.

→ Use the AI Inventory sheet in the Identify | Define AI Principles Worksheet to capture your organization's AI tools and concerns.

Why this matters:

Makes the conversation concrete (not abstract principles, but "we're using these tools")

Reveals tensions organically (the inventory surfaces where the organization feels uncertain)

Provides raw material for principles identification (the tools and their risks inform which principles matter most)

Uncovers "shadow AI": tools team members are using informally without organizational approval

Inventory structure:

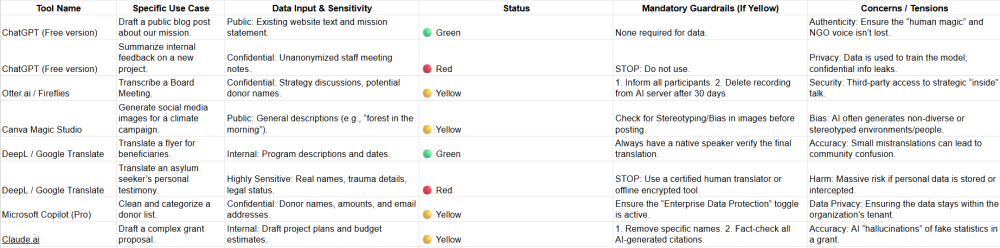

Figure 1: The content of this table is an example. You can find it and the editable table here

Important: An AI tool does not have a permanent status. The status is a result of the Tool + Use Case + Data Sensitivity. Always evaluate the specific task before starting.

Status categories:

🟢 Green: Permitted

🟡 Yellow: Use with caution. Allowed only if you follow specific guardrails.

🔴 Red: Prohibited. Do not use.

⚪ Grey: Wishlist / Exploratory phase.

Data sensitivity levels:

Public: No concerns about exposure

Internal: For organizational use only

Confidential: Limited access, contains sensitive organizational information

Highly Sensitive: Contains personal data, beneficiary information, or legally protected content
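For teams that keep the inventory in a script or database rather than a spreadsheet, the idea that status belongs to the combination of tool, use case, and data sensitivity can be sketched as below. This is an illustrative assumption, not the worksheet's actual format: the field names, the `lookup_status` helper, the tool name "ExampleChatbot", and every example row are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    GREEN = "Permitted"
    YELLOW = "Use with caution"
    RED = "Prohibited"
    GREY = "Wishlist / exploratory"


class Sensitivity(Enum):
    PUBLIC = "Public"
    INTERNAL = "Internal"
    CONFIDENTIAL = "Confidential"
    HIGHLY_SENSITIVE = "Highly Sensitive"


@dataclass(frozen=True)
class InventoryEntry:
    tool: str
    use_case: str
    sensitivity: Sensitivity
    status: Status
    guardrails: str = ""  # only meaningful for YELLOW entries


# Hypothetical rows: the same tool gets a different status per use case.
INVENTORY = [
    InventoryEntry("ExampleChatbot", "drafting social media posts",
                   Sensitivity.PUBLIC, Status.GREEN),
    InventoryEntry("ExampleChatbot", "summarizing internal meeting notes",
                   Sensitivity.INTERNAL, Status.YELLOW,
                   guardrails="remove names before pasting"),
    InventoryEntry("ExampleChatbot", "analyzing beneficiary case files",
                   Sensitivity.HIGHLY_SENSITIVE, Status.RED),
]


def lookup_status(tool, use_case, sensitivity):
    """Return the recorded status for this exact combination, or None.

    None means the combination has not been evaluated yet, so it
    should be reviewed before anyone starts the task.
    """
    for entry in INVENTORY:
        if (entry.tool, entry.use_case, entry.sensitivity) == (
            tool, use_case, sensitivity
        ):
            return entry.status
    return None
```

Keying the lookup on all three fields means a tool never gets a blanket approval. An unlisted combination returns `None` rather than defaulting to green.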

Facilitation tip: When gathering inventory information, create safety for honest disclosure. People often use AI tools they haven't mentioned because they're unsure if it's "allowed." Anonymous input mechanisms can help surface shadow AI use.

Keep this inventory as a living document. It will grow more detailed as you develop governance structures and policies in the CREATE phase.

→ The AI Inventory sheet in the worksheet is designed to be updated over time. Start simple; add detail as your understanding deepens.

1.3 Gather Existing Wisdom

When to do this: Before the group session. One person can compile this information and present it during the CREATE meeting, ensuring the team has a shared foundation to work from.

What to gather:

Your organization's existing values and principles

Mission statement, strategic plan, code of ethics

Any existing technology or data policies

Values statements from your founding documents

Commitments made to funders, partners, or communities

Ask: How might these existing values apply to AI?

Your regulatory landscape

What regulations apply to your context? (EU AI Act, GDPR, sector-specific requirements)

What do funders expect regarding AI use or data protection?

What does your community expect?

Are there professional standards in your sector?

For European CSOs, key EU AI Act principles include human oversight, transparency, data quality, and non-discrimination. Even outside the EU, these frameworks offer useful reference points.

→ See the EU AI Act & Principles Alignment (Most Common Responsible AI Principles sheet) in the downloadable worksheet (Identify | Define AI Principles Worksheet) to understand which principles have regulatory backing.

Benchmarks from the field

A global community has been developing Responsible AI principles since 2019. You don't need to research all of this yourself. We've compiled the most relevant frameworks and examples for you.

→ See the Responsible AI Principles Benchmarks resource for examples from mission-driven organizations including Khan Academy, ChangemakerXchange, Common Sense Media, and others.

→ See the Most Common Responsible AI Principles sheet in the downloadable worksheet (Identify | Define AI Principles Worksheet) for definitions of the most-cited principles across EU, OECD, UNESCO, and NIST frameworks.

Output:

A compiled document or brief presentation that brings this wisdom to the CREATE session:

Summary of regulations relevant to your context

Your organization's existing values (with notes on AI relevance)

The common principles reference sheet (provided)

1-2 examples from similar organizations that resonate with your mission

The goal isn't to become an expert in every framework. It's to have enough context that your team can make informed choices about which principles matter most for your organization. The resources we provide do the heavy lifting. Your job is to bring your organization's unique perspective.

Make sure to come back to Hive Mind next week for Part 2: CREATE - Identifying and Defining Your Principles, to learn how to identify AI principles that matter most for your CSO.

________________________________________________________________________________________________________________________________________________

This article is the first in a four-part series, "The Responsible AI Roadmap for CSOs," developed as part of the AI for Social Change project within TechSoup’s Digital Activism Program, with support from Google.org.

About the Author

Ayşegül Güzel is a Responsible AI Governance Architect who helps mission-driven organizations turn AI anxiety into trustworthy systems. Her career bridges executive social leadership, including founding Zumbara, the world's largest time bank network, with technical AI practice as a certified AI Auditor and former Data Scientist. She guides organizations through complete AI governance transformations and conducts technical AI audits. She teaches at ELISAVA and speaks internationally on human-centered approaches to technology. Learn more at https://aysegulguzel.info or subscribe to her newsletter AI of Your Choice at https://aysegulguzel.substack.com.