Despite several attempts to create tools that protect us from deepfakes, we still lack a reliable tool for detecting them: existing detectors have failed in some cases, as a recent study by the Reuters Institute shows. It is therefore crucial to think critically whenever we are exposed to audiovisual content in different media sources. We need to become more media literate, and this can be achieved in different ways, including learning more about the media, understanding the basics of how media operate, distinguishing facts from opinions, and so on. Various trainings and workshops have been organized to help people become more media literate, and there is even a free self-paced course available on Hive Mind.

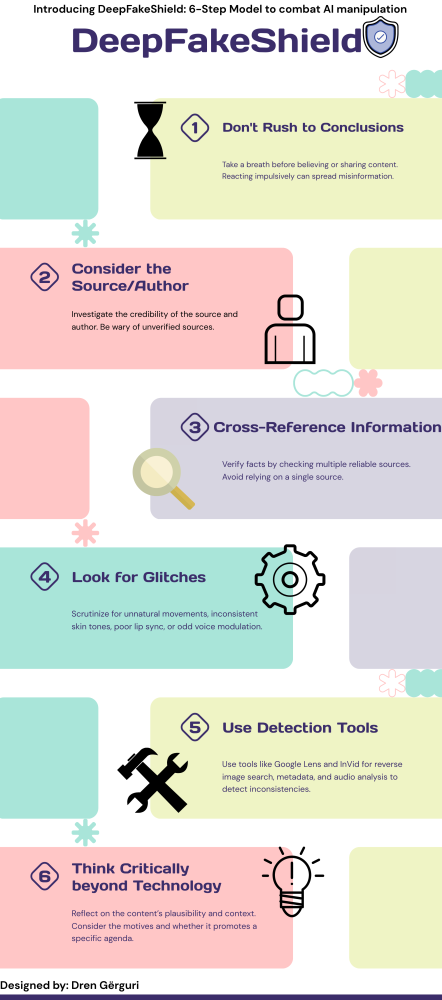

In this text, I present my model for defending yourself against deepfakes: DeepFakeShield in Six Steps.

1. Do not rush to conclusions. For example, if you watch a video on social media of a politician making a controversial statement, do not believe it immediately: reacting impulsively can lead to mistakes. Always take a breath before accepting what you see or hear at face value, and before sharing it, so that you do not unknowingly spread manipulated information.

2. Always consider the source and the author. When evaluating information, it is crucial to weigh the credibility of both. Ask yourself: is it from a recognizable media channel or an unknown social media account? Be cautious of information from unverified sources, such as social media pages or groups, and always try to identify the original author to make sure the information is reliable and trustworthy. Unverified sources are often the ones that disseminate disinformation and deepfakes.

3. Cross-reference across multiple online platforms and media outlets. If you see that video of a politician on social media, check whether credible news media are reporting the same story. Relying on a single source makes you more vulnerable to disinformation, so verify information by seeking out diverse sources before analyzing it and forming an opinion.

4. Look for glitches. Deepfakes are evolving, but there are still some glitches that our eyes or ears can catch. It is therefore important to analyze the face, skin color, lip synchronization, background, unnatural pauses, and fluctuations in voice pitch. To continue with our example of the video on social media, examine it closely for unnatural movements, inconsistent skin tones, poor lip synchronization, odd background elements, or unusual voice modulation. While deepfakes can be extremely convincing, they often contain small errors. Pay attention to details, because these glitches are easy to miss if you are not careful.

5. Use tools that can help detect deepfakes in various ways. Reverse image search with Google Lens can help you identify the source of an image or video, see where it first appeared, and check whether it has been altered. Metadata analysis and audio analysis with InVID let you identify inconsistencies or anomalies in the data, or discrepancies in audio tracks. Not using these tools can leave you vulnerable to believing manipulated content. For any suspicious video you come across, it is important to verify its authenticity, and using multiple tools will give you a more thorough analysis of the content.

6. Think beyond the technology and critically evaluate the content. Ask yourself: does this seem too good (or bad) to be true? Consider the broader context and assess whether the information aligns with established facts, events, and expert knowledge. Think about the motivations behind the content and whether it seems to be promoting a particular agenda or narrative. There is always a goal behind every deepfake, often tied to larger political, economic, or ethical arguments. Deepfakes can be used in politics to spread disinformation and to harm important social processes, such as elections. A deepfake may be created with the intention of influencing elections by manipulating public opinion, as happened in Slovakia this year and in the US last year. Deepfakes can also be economically motivated, and there are already various examples of people being manipulated, such as the engineering group that transferred $25 million to fraudsters who had used a deepfake of a senior manager during a video conference in Hong Kong. Of course, deepfakes can be used for entertainment too, as seemingly harmless content. Even so, it is important to understand these underlying goals and why deepfakes exist in the first place.

By adopting the DeepFakeShield in Six Steps model, we can build a strong barrier against manipulation by Artificial Intelligence. We can lessen the chance of being misled or tricked by taking the time to double-check information, researching the content, and considering its context thoroughly. In this way, we can protect ourselves from the negative consequences of deepfakes and contribute to a safer and better-informed digital environment.

Want to become more resilient to disinformation? Register for our online self-paced course on Media Literacy!

📖 If you wish to learn more and get access to a set of useful tools, download our FREE TechSoup Guide on Digital Tools for Countering Disinformation: https://bit.ly/CounteringDisinformationToolsGuide

Background illustration by Fantastic