AI as a tool for data analysis and narrative shaping

The impact of AI on our daily lives is evident, and one of its most profound effects can be seen in information warfare. AI is used both to analyze vast amounts of data and to shape and influence narratives. This article explores how AI is used in the war in Ukraine to manipulate public perception and opinion.

AI tools are very effective at collecting and analyzing data from online sources, and they enable their users to influence how narratives are constructed and shared. Because AI can detect patterns and trends, it can play a crucial role in shaping perception and opinion, for instance by framing or distorting events. Russian military officials said in 2018 that AI would help Russia fight threats online and win cyber wars. Since then, the Russian government has used various AI tools, including pro-Russian bots. Research on digital propaganda and conflict rhetoric has shown that AI bots played an instrumental role in amplifying pro-Russian narratives and undermining the credibility of NATO and Ukraine. The same tools can be used to counter these attacks. For this purpose, Palantir, a technology company, has provided its AI and data analysis software to help Ukraine’s military. Technology companies such as Microsoft, Amazon, Google, and Clearview AI have also supported Ukraine with cyber defense, cloud services, and other tools.

The misuse of AI for disinformation and psychological operations

AI tools can significantly contribute to the creation and dissemination of disinformation. AI-generated propaganda, such as deepfakes, has been used to frame events in ways that serve specific interests. By highlighting certain aspects of an event, those who make false narratives go viral aim to sway public opinion in a particular direction. One notable instance occurred in March 2022, when a deepfake video of the Ukrainian president Volodymyr Zelenskyy surrendering appeared online. Although it was debunked, the video was later included in a research study of how people react to deepfakes on social media. The results showed that people reacted differently to different kinds of deepfakes, and that the Zelenskyy deepfake provoked shock, worry, and confusion among viewers.

AI-generated fake profiles on social media are part of another strategy to accelerate the spread of false narratives. One Russian propaganda technique is to sow confusion by using AI bots to make content go viral and influence people’s perception of specific topics. This type of coordinated manipulation has produced a range of false narratives. AI-driven bots were used to disseminate false information and conspiracy theories about the war on social media platforms, such as the conspiracy theory that Ukraine was developing biological weapons with alleged support from the United States.

Russia has also used AI-powered psychological operations (PSYOPs) to influence both Ukrainians and the international community. These campaigns have been ongoing since 2014 and focus on historical ties and shared culture in order to weaken support for Ukraine's sovereignty and promote a favorable view of Russia.

The challenges of detecting deepfakes and the DeepfakeShield Model

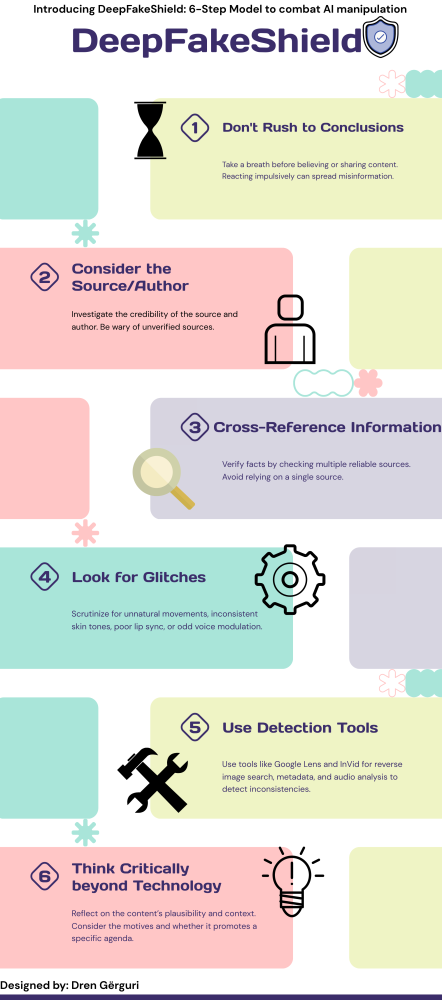

AI-generated content is becoming more sophisticated, which makes it harder to distinguish real information from fake. This pushes people toward a general distrust of media and institutions, and toward rejecting even reliable sources of information. Building an effective AI detection tool remains a crucial challenge: existing tools are still imperfect and have in some cases produced incorrect analyses. In this situation, the DeepfakeShield in Six Steps model is an appropriate way to protect ourselves from AI manipulation and to build a safer, better-informed digital environment.

Background illustration: Shutter2U