Efforts to fight misinformation and promote media literacy have led to the development of several models for verifying information, most of which focus on news articles. The most popular include the CRAAP test and the IFLA model (from the International Federation of Library Associations and Institutions), which is often distributed as an infographic.

However, a substantial share of the information circulating in the public sphere does not come from traditional news media or their online outlets; it spreads through text messages and videos posted on social media. Such messages have specific features, which means the models used to analyze them need to be adapted. A model designed for this kind of content could standardize the analysis of misinformation and be included in media literacy programs.

Particularities of social media communication

When verifying articles published by online outlets, it is necessary to look for information about the outlet itself (e.g., whether it has a known bias, which sources it quotes, and who the author is). In the case of social media content, however, a significant part of misinformation is driven by well-known influencers. Their sources are usually varied and not cited directly, since most social media posts are opinions based on personal convictions and on information accumulated over time.

Another characteristic of social media content is the tone or style in which information is presented, which is similar whether the material is text, audio, or video. Social media communication is more colloquial than traditional media and does not necessarily follow a typical structure (title, lead, body). Moreover, influencers, who make up a sizeable part of the media sphere, develop their own styles, often using sarcasm to emphasize their key messages and build a personal brand. Such an informal style (e.g., capital letters, colloquialisms, or sarcasm) can be a marker of misinformation in news articles, but less so in social media content.

A model for verifying social media content needs to take these characteristics into account. It also needs to be applied systematically in order to be useful in countering misinformation, propaganda, and conspiracy theories.

One such model is NALF, which stands for:

Narratives - known false narratives;

Appeal to emotions;

Logic - errors in reasoning;

Facts - altered or invented.

Let’s go through each of these criteria in turn.

Known false narratives

Misinformation agents never reconsider their position on topics of interest. Rather, they tend to act as if the narratives they promote have already been proven, and as if only propagandists who defend “the system” refuse to accept them.

In reality, many false narratives have been thoroughly debunked either by media organizations that have fact-checking departments, or by organizations specializing in verifying the accuracy of media content. For example, we know for certain[1] that COVID vaccines do not contain chips and don’t cause waves of deaths, and that extreme weather events and earthquakes are not caused by HAARP. References to such narratives (and others) can be considered markers of misinformation.

Projects focused on analyzing misinformation and raising media literacy need to be efficient (i.e., requiring little time and effort) and scalable, especially as they aim to reach a wider public that lacks both experience and interest in verifying information.

The analysis also needs to be easy for anyone to replicate. Checking content for similarity to known false narratives is quick and easy, even without much experience. However, for this to work, debunked narratives must become better known among the general public. This could be achieved by promoting the practice of fact-checking and by including descriptions of known false narratives in media literacy resources.

Appeal to emotions

Misinformation usually aims to agitate, arouse anger, or trigger a defensive reaction among readers. Content that makes outrageous or shocking claims is more likely to be manipulative.

The appeal to emotions often comes at the expense of actual information. Thus, one way to guard against the emotional impact of content is to ask: what have we actually learned from it? Does it describe or explain specific events, concepts, or mechanisms, or is it limited to emotional triggers?

One particular way influencers appeal to emotion is through loaded words: synonyms or alternative terms chosen to replace mainstream ones specifically to provoke the emotional reaction the author wants (e.g., “muzzle” instead of face mask, or “serum” instead of vaccine). For instance, a Facebook post by the Romanian lawyer Gheorghe Piperea claimed that, during a meeting of the World Economic Forum, an “octogenarian lady” stated that the Earth’s population needs to be reduced tenfold. The text emphasizes the author’s personal reaction to this alleged statement: he stresses the advanced age of the participants by calling them “senile” and describes them as “crazy” and “evil.” The post offers no information that would allow its claims to be verified (e.g., who made the statement and when, or what words were actually used). Instead, it simply claims to expose an evil plan to kill 90% of people in the near future, a framing designed to instill fear and anger in readers.
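Part of the loaded-words check can be mechanized. The Python sketch below is a minimal illustration, assuming a hand-curated lookup table; `LOADED_TERMS` and `find_loaded_words` are hypothetical names, and the table holds only the two pairs mentioned above. It only flags terms for a human reviewer, since a word like “serum” also has legitimate uses.

```python
import re

# Hypothetical lookup table: loaded term -> mainstream term it replaces.
# Only the two examples from the text; a real list would be curated per language.
LOADED_TERMS = {
    "muzzle": "face mask",
    "serum": "vaccine",
}


def find_loaded_words(text: str) -> list[tuple[str, str]]:
    """Return (loaded term, mainstream term) pairs in order of appearance."""
    hits = []
    # Lowercase the text and scan it word by word (letters only).
    for match in re.finditer(r"[a-z]+", text.lower()):
        word = match.group()
        if word in LOADED_TERMS:
            hits.append((word, LOADED_TERMS[word]))
    return hits


post = "They want to force the serum on everyone and make kids wear a muzzle!"
print(find_loaded_words(post))  # [('serum', 'vaccine'), ('muzzle', 'face mask')]
```

Exact word matching is deliberately simple: it would miss plurals such as “muzzles,” so a practical list would need to cover other word forms as well.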

Faulty logic

Some posts are full of well-known logical fallacies, rest on premises offered without proof, or jump from premises to conclusions without explaining the reasoning behind them.

Contradictions and logical errors are important markers of misinformation, since one of its main drivers is “ideological blindness” (i.e., the tendency to ignore information that does not confirm the adopted ideology).

This criterion is more difficult to apply, since it requires knowledge of common logical fallacies, such as:

lack of evidence - making claims without providing any evidence or proof;

confusing correlation and causation - for example, eating ice cream and getting sunburned may be correlated (both are more common in hot, sunny weather), but this doesn’t mean that eating ice cream causes sunburn;

the “strawman” - responding to ideas or statements that no one actually supports.

Besides these obvious logical fallacies, some less evident forms of faulty logic are worth mentioning as well:

Appeal to irrelevant authority

Promoting a narrative based on the notoriety of a public figure who supports it. Climate deniers, for example, often cite Ivar Giaever’s dismissal of climate change as proof, solely because he is a physicist who was awarded the Nobel Prize. However, Giaever received the award in 1973, never studied climate science, and actually declared (in 2012) that he “is not that interested in global warming.”[2] Similarly, anti-vaccination doctors are sometimes touted as “the best in the world,” with the implication that we should listen to them, when, in fact, the evidence from studies on vaccines matters more than the opinion of any individual doctor.

Exaggerated minority

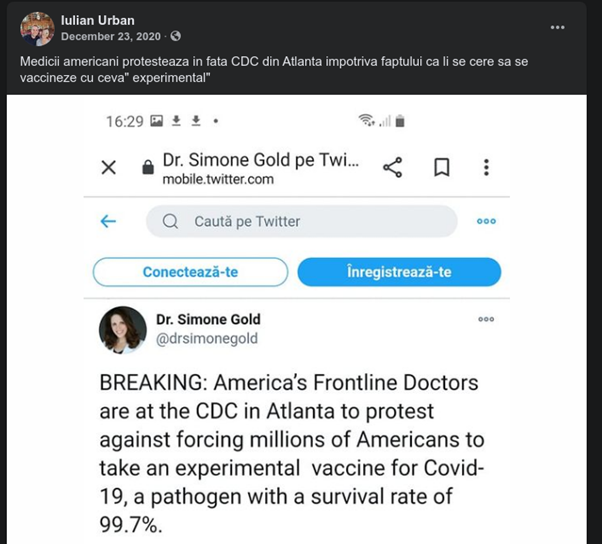

Taking a small number of vocal individuals and presenting them as representative of a larger group or an entire profession. The image above illustrates this approach: the Romanian text reads “American doctors protest in front of Atlanta CDC against being asked to take experimental vaccines.” However, the organization featured in the post is not an association of physicians but a political organization with a small number of physicians among its members.[3]

Statistical manipulation

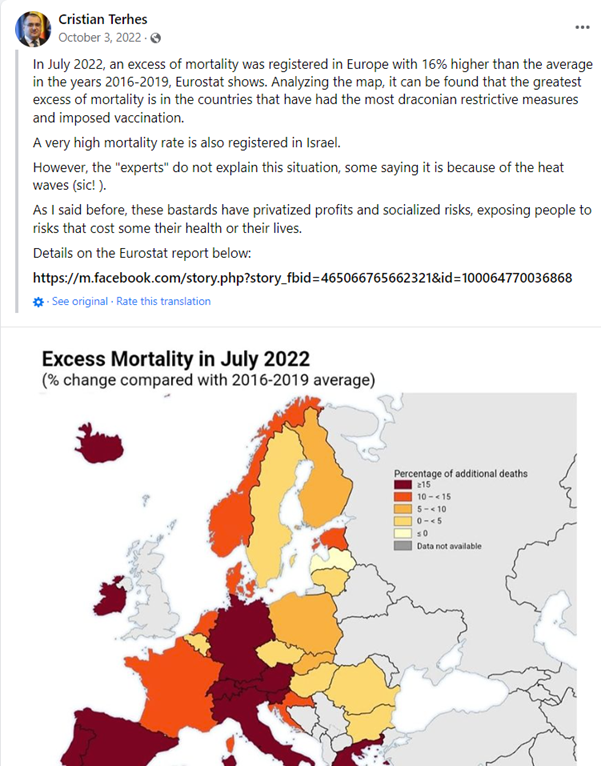

Selecting only the data points that support a particular narrative while ignoring the wider set of available data (i.e., cherry-picking, or the Texas sharpshooter fallacy). As shown in the screenshot above, Romanian MEP Cristian Terheș claimed that countries with more measures designed to protect public health and higher vaccination rates have had higher excess mortality rates. However, Terheș selected one particular month and, even for that month, ignored the countries that contradict his claim (such as Latvia, Sweden, and the Czech Republic).
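The effect of cherry-picking is easy to demonstrate with a toy example. The Python sketch below uses invented figures for six hypothetical countries (not real mortality data) and compares average excess mortality for countries with stricter versus more relaxed measures, first across the whole dataset and then across a hand-picked subset. The names `COUNTRIES` and `group_means` are illustrative only.

```python
from statistics import mean

# Hypothetical toy data: (country, strict_measures, excess_mortality_pct).
# These numbers are invented for illustration and are not real statistics.
COUNTRIES = [
    ("Country A", True, 8.0),
    ("Country B", True, 10.0),
    ("Country C", True, 18.0),
    ("Country D", False, 15.0),
    ("Country E", False, 20.0),
    ("Country F", False, 7.0),
]


def group_means(rows):
    """Return (mean excess mortality of strict countries, mean of the others)."""
    strict = [excess for _, is_strict, excess in rows if is_strict]
    relaxed = [excess for _, is_strict, excess in rows if not is_strict]
    return mean(strict), mean(relaxed)


# Honest comparison: every country in the dataset.
full_strict, full_relaxed = group_means(COUNTRIES)

# Cherry-picked comparison: keep only the countries that fit the narrative.
picked_names = {"Country C", "Country D", "Country F"}
picked = [row for row in COUNTRIES if row[0] in picked_names]
picked_strict, picked_relaxed = group_means(picked)

print(f"All countries: strict={full_strict:.1f}, relaxed={full_relaxed:.1f}")
print(f"Cherry-picked: strict={picked_strict:.1f}, relaxed={picked_relaxed:.1f}")
# All countries: strict=12.0, relaxed=14.0
# Cherry-picked: strict=18.0, relaxed=11.0
```

Across all six countries, the stricter group has lower excess mortality; keeping only three convenient countries reverses the conclusion, even though no individual number was altered.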

Representation of facts

Social media posts are generally opinions or interpretations of facts and events. However, even opinions should be based on correctly represented facts, which is not the case for misinformation. In some cases, false narratives rest on facts or events that are completely made up, but more often misinformation relies on slight, subtle distortions that can be hard to pinpoint.

Many such posts start from statements by public figures that have been truncated or taken out of context to support false narratives. For example, in May 2022, a viral video claimed that Albert Bourla (CEO of Pfizer) advocated reducing the world’s population by 50% before 2023. In reality, as Politico has shown, the speech was about reducing the number of people who can’t afford vaccines.

This criterion is the most difficult to apply, since it requires identifying every fact mentioned in the content, and facts are sometimes woven into the discourse in ways that make them hard to isolate. It also requires validating those facts against multiple sources. On the other hand, this criterion is the strongest indicator of misinformation: any content that includes false information is by definition problematic, whatever the author’s intentions.

Conclusion

By using the NALF model (Narratives - Appeal to Emotion – Logic – Facts), it is possible to systematically check social media content in order to assess the likelihood that it contains misinformation.

The four criteria should not be treated as binary (yes or no) indicators but as scales, which can be combined into a score reflecting how likely a piece of content is to manipulate readers.
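As an illustration of how the four criteria could be combined, here is a minimal Python sketch. The 0 to 3 rating scale, the specific weights, and the name `nalf_score` are assumptions made for this example, not part of the model as described; the only grounded choice is giving facts extra weight, since that criterion is described above as the strongest indicator. The result is a relative score between 0 and 1, not a calibrated probability.

```python
# Hypothetical weights: "facts" is doubled because it is the strongest
# indicator of misinformation; the exact values are assumptions.
WEIGHTS = {"narratives": 1.0, "emotions": 1.0, "logic": 1.0, "facts": 2.0}
MAX_RATING = 3  # assumed scale: 0 = absent, 3 = strongly present


def nalf_score(ratings: dict[str, int]) -> float:
    """Combine per-criterion ratings into a weighted score between 0 and 1."""
    if set(ratings) != set(WEIGHTS):
        raise ValueError(f"expected ratings for exactly {sorted(WEIGHTS)}")
    for criterion, rating in ratings.items():
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"{criterion} rating must be between 0 and {MAX_RATING}")
    weighted = sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS)
    # Normalize by the highest possible weighted total.
    return weighted / (sum(WEIGHTS.values()) * MAX_RATING)


# Example: echoes a known narrative, highly emotional, weak logic, no false facts found.
post = {"narratives": 2, "emotions": 3, "logic": 1, "facts": 0}
print(f"NALF score: {nalf_score(post):.2f}")  # NALF score: 0.40
```

With these weights, a post rated 3 on facts alone scores 0.40, the same as one rated 3 on both emotions and logic; adjusting `WEIGHTS` is how a team would encode its own judgment about the relative importance of the criteria.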


Background illustration: Photo by Andrii Yalanskyi, licensed from Adobe Stock

[1] https://www.hopkinsmedicine.org/health/conditions-and-diseases/coronavirus/covid-19-vaccines-myth-versus-fact

[2] https://skepticalscience.com/ivar-giaever-nobel-physicist-climate-pseudoscientist.html

[3] https://www.sourcewatch.org/index.php?title=America%27s_Frontline_Doctors