AI is sexy, AI is cool. […] AI is our final invention, AI is a moral obligation. AI is the buzzword of the decade, AI is marketing jargon from 1955. AI is humanlike, AI is alien. […] AI will increase abundance and empower humanity to maximally flourish in the universe. AI will kill us all.

These are fragments of the introductory paragraph of Will Douglas Heaven's article in MIT Technology Review, published on July 10, 2024, under the title "What is AI? Everyone thinks they know but no one can agree. And that’s a problem". They range from techno-utopia to techno-panic and everything in between, reflecting the zeitgeist (the spirit of the time): its sentiments, concerns, and perceptions regarding artificial intelligence (AI).

After this indisputably catchy intro to his essay, the Senior Editor for AI at MIT Technology Review provides a short and clear answer to the question asked in the headline, only to show that in reality it would be hard, if not impossible, to formulate a general definition that represents and explains all the aspects, possible uses, and challenges stemming from the mass penetration of AI into our lives.

This article will not try to forge a better or more comprehensive definition. Instead, it looks at one specific type and use of AI-powered technology, large language models (LLMs) that can mimic the way we talk and write, from the perspective of media literacy. A plenitude of definitions, dictionary meanings, and metaphors can be found with a good old Google search or a visit to Wikipedia, where ChatGPT was the most viewed article in 2023 (in English), with 49 million page views. But as in all research quests and in the practice of media literacy, and as W. D. Heaven demonstrates, the ability to formulate good questions and to seek answers critically (and self-critically) is the path to finding answers, however temporary.

After all, as Alvin Toffler said:

The illiterate of the 21st century will not be those who cannot read and write, but those who cannot learn, unlearn, and relearn.

Conceptual framework

Much as well-known brands become associated with a whole type of product, ChatGPT has become synonymous with AI. It has attracted significant public interest thanks to extensive media coverage and the rapid adoption of generative AI technologies. This interest is notable given the many varieties of AI and AI chatbots available across sectors, including education and business. It underscores ChatGPT's potential to transform numerous fields, driven by its capabilities and accessibility.

Media history provides valuable context for understanding GAI as part of longer trajectories of media innovation and disruption. ChatGPT's adoption rate has been extraordinarily rapid, outpacing both traditional media like television, and even fast-growing digital platforms like TikTok and Instagram. Its growth from launch to 100 million users in just two months is unprecedented in media history.

The rapid adoption of generative AI (GenAI, or GAI) technologies like ChatGPT highlights the immense interest they raise and their potential impact, following the usual pattern seen with every new medium. GAI evokes both enthusiasm and technological utopianism because of its demonstrated and potential beneficial uses. At the same time, legitimate concerns about its ability to exacerbate existing societal issues, like the generation and dissemination of false and misleading information, trigger techno-panic and moral panic.

According to IBM Research, GAI refers to deep-learning AI models that can generate high-quality content of different kinds and forms based on the data they were trained on. As the Merriam-Webster Dictionary puts it, such a model generates content in response to a submitted prompt by learning from a large reference database of examples.

But why, and based on what assumptions and arguments, should we look at LLMs through the lens of media studies (MS) and media literacy (ML)? And what are the implications for media literacy in the context of generative AI?

Let’s outline some of the most important or the most obvious reasons.

The impact of LLMs on media and audiences

GAI and LLMs are fundamentally transforming how media content is created, distributed, and consumed, shaping cultural production and representation. They are already altering workflows in newsrooms and media organizations. Media studies' interdisciplinary nature allows for integrating technical, social, and humanistic perspectives on GAI. MS provides frameworks to analyze these shifts in the media ecosystem and help examine the implications of AI-generated content on journalism, entertainment, and other media sectors. MS approaches can also examine how GAI may reinforce or disrupt existing power structures in the media industry.

Similarly to algorithmically driven recommendation systems, like those of YouTube or TikTok, LLMs enable highly personalized content recommendations and interactions. Media studies concepts around audience reception and engagement are relevant for understanding these dynamics.

There are concerns about GAI's potential to generate convincing misinformation at scale. New competencies are needed to understand and critically engage with AI-generated media. Media literacy frameworks are crucial for equipping people to critically evaluate both human-produced and AI-generated content, and that is why media literacy education needs to evolve to address GAI.

An illustration generated specifically for this article by Dall-e 3 via Bing with the prompt "Minimalistic 3D digital art showing the evolution of media and communication from written speech to generative AI".

In essence, media studies and media literacy offer established theoretical and methodological tools that can be applied to critically examine the complex ways GAI and LLMs are reshaping media, while also evolving to address the unique challenges posed by these technologies. This approach can provide nuanced insights that go beyond purely technical analyses.

And that is what this article invites you to do.

ML approach to LLMs

Media literacy education is the ongoing development of habits of inquiry and skills of expression necessary for people to be critical thinkers, thoughtful and effective communicators, and informed and responsible members of society.

Among dozens of definitions of media literacy, this one, articulated by the National Association for Media Literacy Education, is especially relevant to the practice of interacting with LLM-powered chatbots like ChatGPT, Perplexity, Mistral, and Gemini, and to the potential to build ML-related competences through that practice. Both the exercise of media literacy and the retrieval of information from GAI through dialogue-like prompting require the ability to formulate questions, to question the answers and your own interpretations, to dissect the conclusions you reach, and to assume that you may have fooled yourself, whether because of a convincingly presented claim, missing pieces of information, or the human propensity to seek confirmation (known as confirmation bias).

What LLM-powered chatbots allow, and what is especially valuable from a media literacy perspective, is experimentation and comparison. What answers will I get to the same question from different chatbots? How will the answers vary if I word the question differently, provide different context, direct the LLM toward different fields and objectives, or change its temperature (a setting that controls how much randomness goes into the choice of each next word)? Think of the prompt as a sandbox for improving language and analytical skills.
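For readers curious about what changing the temperature does, here is a minimal toy sketch in Python. It is not how any particular chatbot is implemented: the three candidate words and their scores are invented for illustration, and the function name `softmax_with_temperature` is an assumption of this sketch. It only shows the general idea that a model's raw scores for possible next words are divided by the temperature before being turned into probabilities.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores into probabilities, scaled by a temperature value."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words (invented for this sketch).
candidates = ["media", "message", "meme"]
scores = [2.0, 1.0, 0.1]

for temperature in (0.2, 1.0, 2.0):
    probabilities = softmax_with_temperature(scores, temperature)
    rounded = {word: round(p, 3) for word, p in zip(candidates, probabilities)}
    print(f"temperature={temperature}: {rounded}")
```

At a low temperature almost all of the probability goes to the top-scoring word, so answers become more predictable. At a higher temperature the probabilities even out, so less likely words are chosen more often and the answers vary more between attempts.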

Chatbots like Perplexity (an AI-powered search engine and conversational assistant designed to provide real-time answers to user queries using natural language processing and LLMs) are good at interpreting the meaning of single words and phrases and at semantic analysis: the denotative (literal) and connotative (associative) meanings of words and expressions. Yet, despite the advancements in semantic analysis for LLMs, the ambiguity of human language is still one of the main challenges. While these models are good at “understanding” the syntax and semantics of language, they often struggle with tasks that require an understanding of the world beyond the text.

LLMs can also perform some level of semiotic analysis, although this ability is less advanced than their semantic capabilities. They may not do well with highly abstract or culturally specific symbols, and their analysis may not always capture the full depth or nuance of symbolic relationships. But even this limitation is useful when we are aware of it: we humans also have our limitations in interpreting rhetorical figures, signs, and symbols when we lack the necessary knowledge and context.

Generated by Dall-e 2 in early 2023 in response to the following prompt by the author: Salvador Dali paints a portrait of the transformer language model Dall-E in a surrealistic futuristic visual style.

In recent years, media literacy has largely become associated with (and unfortunately narrowed down to) news literacy and, more specifically, fact-checking. And what is fact-checking if not the comparison of different sources, looking for the most reliable and relevant ones, so that claims presented as facts can be verified or refuted, and statements contextualized? This process can be practiced by prompting LLMs as well, especially by “playing” with Perplexity, which Kevin Roose presented in his New York Times article from February 2024 titled "Can This A.I.-Powered Search Engine Replace Google? It Has for Me." While he describes how impressed he was after testing Perplexity for weeks, the author, journalist, and technology columnist for The New York Times also confesses his fear that A.I. search engines could destroy his job and that the entire digital media industry could collapse as a result of products like it.

What sets Perplexity apart, and has been a key feature since its launch, is that it generates answers based not only on the data on which it was trained but also on web searches, and its answers are annotated with links to the sources the A.I. used, along with a list of suggested follow-up questions. (Disclaimer: the author conducts her tests mainly with Perplexity Pro, finding it the most suitable for her work, but declares that she has no interactions or connections with the company behind this product.) Perplexity supports several AI models: a proprietary model optimized for fast search by Perplexity, Claude 3 Opus and Claude 3.5 Sonnet, Sonar Large, trained by Perplexity based on LLaMa 3, and the advanced GPT-4o model by OpenAI.

Here is an exercise you could do to test how an LLM-powered chatbot could assist you in doing a basic fact check:

Open Perplexity and ChatGPT in two adjacent tabs of your browser (OpenAI now allows limited use of the premium GPT-4o without a paid subscription). Write a prompt instructing the chatbot to compare the adoption rate of chatbot-type generative AI like ChatGPT with the adoption of legacy and new media like social media platforms. After it returns a response, ask it to analyze a paragraph of your choice and to identify and list all the factual claims it made there. In the next turn, ask the model to fact-check all the claims it listed, that is, to look for and cite at least two reliable sources for every claim.
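For those who would rather script this exercise than type it into a browser, the three turns can be sketched in Python. This is only an outline under stated assumptions: `ask` stands for whatever function sends a conversation to a chatbot and returns its reply (none is provided here, and no real service is called), the user/assistant message format is a common convention rather than the interface of a specific product, and the wording of the prompts is one possible phrasing of the steps above.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def build_fact_check_prompts(paragraph: str = "the second paragraph") -> List[str]:
    """Return the three prompts of the exercise, in the order they are asked."""
    return [
        "Compare the adoption rate of chatbot-type generative AI like ChatGPT "
        "with the adoption of legacy and new media like social media platforms.",
        f"Analyze {paragraph} of your answer and list every factual claim it makes.",
        "Fact-check every claim you listed. For each claim, cite at least two "
        "reliable sources.",
    ]


def run_exercise(ask: Callable[[List[Message]], str], prompts: List[str]) -> List[Message]:
    """Ask the prompts one turn at a time, keeping the whole dialogue as context."""
    history: List[Message] = []
    for prompt in prompts:
        history.append({"role": "user", "content": prompt})
        reply = ask(history)  # hypothetical call to a chatbot of your choice
        history.append({"role": "assistant", "content": reply})
    return history


def placeholder_chatbot(history: List[Message]) -> str:
    """Stand-in for a real chatbot so the sketch runs without any service."""
    turn = len(history) // 2 + 1
    return f"(reply to turn {turn})"


if __name__ == "__main__":
    for message in run_exercise(placeholder_chatbot, build_fact_check_prompts()):
        print(f"{message['role']}: {message['content']}")
```

Keeping the full history in every turn matters because the second and third prompts refer back to earlier answers. Running the same prompts against two different chatbots and comparing the transcripts side by side mirrors the two-tab setup of the exercise, and the sources each model cites still need to be opened and read by a human.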

Why should this matter?

From my experience working with undergraduate students and interacting with newcomers or relatively inexperienced editors on Wikipedia, to which I regularly contribute and which I use as a medium and tool for developing media literacy, I can say that many of them find it difficult to start writing texts with proper and strict citation of reliable and appropriate sources. Perplexity also allows users to search within a specific set of sources (academic, video, social, etc.), to see the sequence of tasks carried out by the model while generating answers (in the Pro version), to generate images using Dall-e 3, and to extract, analyze, and summarize data and information from attached files in a few different formats (images, texts, or PDFs).

While keeping in mind that A.I.-powered search engines still make mistakes and can "hallucinate", an environment such as the one provided by Perplexity allows educators to demonstrate and practice the preparation for conducting a study. This includes identifying the scientific fields that cover the topic of interest, doing a literature review, generating a pool of ideas, articulating hypotheses, considering different methods, outlining a structure, and preparing a plan (sequence of steps, timeline, schedule). Such knowledge, skills, and habits are important for students in general, but also for practicing media literacy with all types and forms of media content.

What else could you do with LLM-powered chatbots without engineering education and knowledge (with all the caveats that the results may contain minor errors, partial untruths, and absolute fabrications while acknowledging that this risk is not limited to GAI)?

check if you will find the answer to your question in a specific source,

extract and interpret data from images, videos, information, tables, and databases,

perform basic statistical analysis or learn how statistics work,

check, correct, and explain natural or programming languages,

analyze human behaviors (including vulnerability to logical fallacies, emotions, and predispositions).

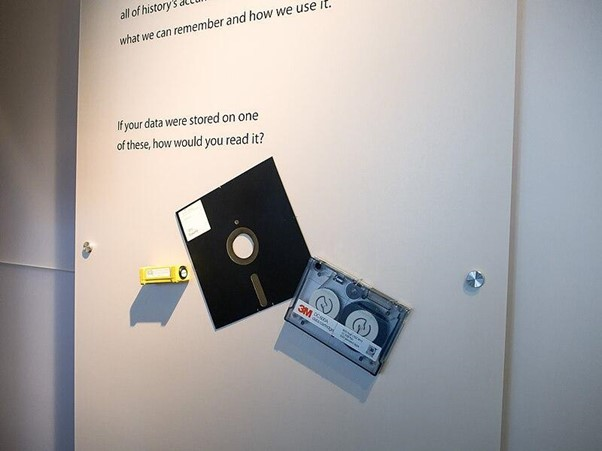

Old digital media - Computer History Museum. Author: Ik T from Kanagawa, Japan. 5 November 2016. License: CC BY Published and free to share and remix with attribution on Wikimedia Commons.

A note by the author: The design, training, and use of generative artificial intelligence involve ethical considerations and issues that should be taken into account (privacy, copyright, and sustainability to name a few). Due to the limitations of the volume and scope of this article, it does not address these issues but acknowledges and highlights their importance.

In addition to the publications cited in this article, its creation has been influenced by recent reading of the following few articles, which may be regarded as recommended reading for those interested:

Prompt Engineering Fundamentals: what Prompt Engineering is, why it matters, and how we can craft more effective prompts for a given model and application objective

OECD Truth Quest Survey (published in June 2024). The Organisation for Economic Co-operation and Development’s Truth Quest contributes to the statistical literature on measuring false and misleading content by providing comparable cross-country evidence (from 21 countries, mostly English-, French-, German-, or Spanish-speaking) on media literacy skills by theme, type, and origin (generated by humans or AI). It assesses the effect of AI labels on people’s performance and offers insights into where people get their news, as well as people’s perceptions about their media literacy skills, among other issues.

Background illustration: Generated by Dall-e 2 in early 2023 in response to the following prompt by the author: Salvador Dali paints a portrait of the transformer language model Dall-E in a surrealistic futuristic visual style.

Author: Iglika Ivanova

Are you using LLM-powered chatbots in other ways? Would you like to share your experiences, whether positive, negative, or controversial? Please send me a message at iglika@mediapolicy.eu. I will be grateful and delighted to hear from you.